In a Q&A session at a student-run event a couple of years ago, I was asked to answer the question "Name one thing you're really good at". Most of the audience knew me, either in person or by reputation and job title. I assumed they were expecting me to name a skill that had propelled me through my career. It was not a question I was anticipating or particularly prepared for; however, in a public "lightbulb moment", I answered that I was good at "Failing". The room went quiet. You could hear a pin drop as my audience started to process my one-word answer. I let that word sink in for a moment and then elaborated.

Firstly, I discussed the fact that I talk to all sorts of students in all sorts of situations. Some have just "failed" a test or exam and are drawing all sorts of conclusions about what that implies. Being a Professor does not make me immune to "failure". My own student transcript is full of high grades. However, my mark on my very first University test was definitely not in A+ territory. If I had let that define me, my life would have been very different! I had skipped first-year University classes in a "direct entry" program and started University study at second-year level. I used the low mark on my first test as fuel to figure out what it would take to truly succeed in that environment.

The version of my CV that I would normally share when applying for a grant, promotion or award lists a whole range of academic and professional successes: papers published, grants won, awards received. However, what most people don't get to see are the file folders of unfunded grant applications, the paper reviews so off the mark that I could readily believe the reviewer was referring to someone else's paper, and the nomination material for awards that went to other deserving applicants.

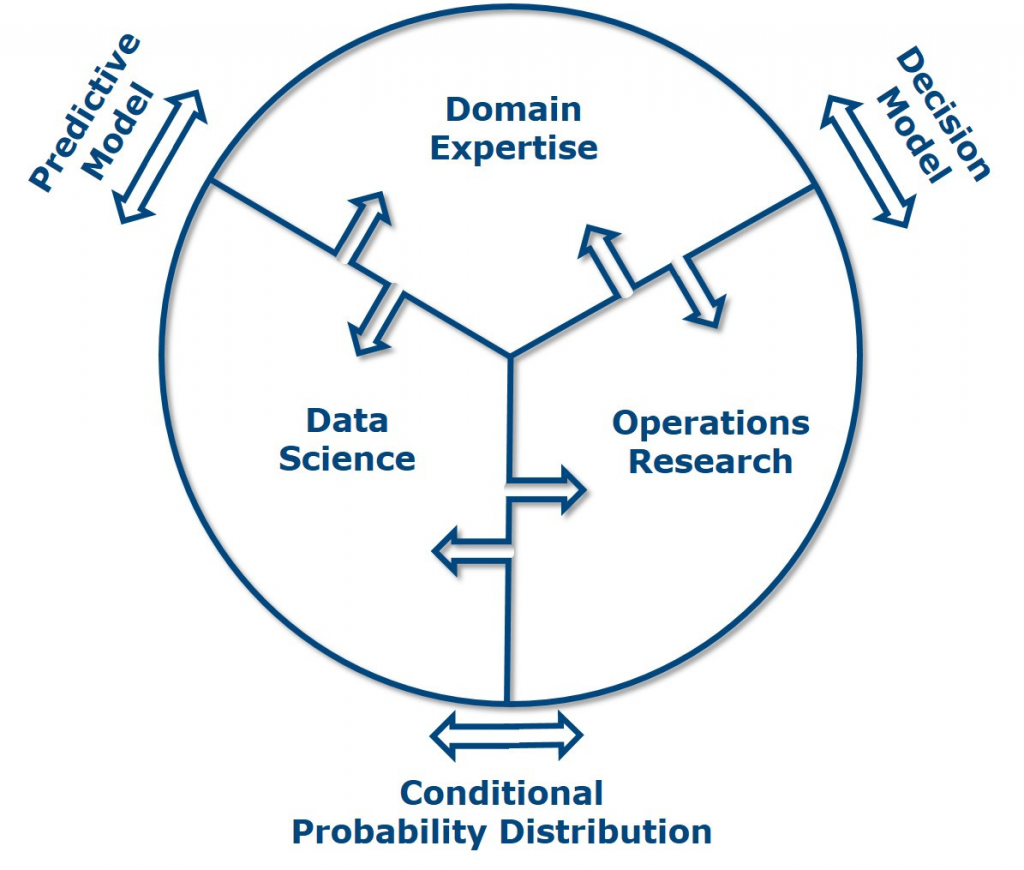

However, the successes on my CV are built on a string of "failures". Telling a group of students that I am good at failing was a statement about the resilience needed to pursue an academic career. Being "good at failing" means that I've always made a point of learning everything I can from situations where the outcome may not have been defined as a perfect "success". If success is an iceberg, then the failures lie below the waterline, invisible to most people. For some thoughts on creating a "CV of failures", check out this post on the GradLogic blog.

I'd encourage anyone in an academic environment to embrace failure!

“The Iceberg Illusion” illustration is by Sylvia Duckworth used under Creative Commons license.